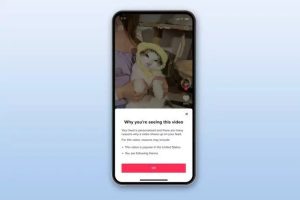

TikTok has tightened its algorithm for detecting explicit content. The social media company says it is now better able to detect so-called ‘suggestive content’, which is not suitable for minors but does not violate its policies.

According to the company, the improvements mean that young people should encounter this ‘suggestive’ or ‘borderline’ content even less often, making the social network a safer place for them.

‘Borderline content’ can be sexual or violent in nature. It is not suitable for underage TikTok users, but it does not break the platform’s rules, because it is not explicit enough to be removed.

Since the introduction of the Content Levels rating system earlier this year, videos of this type are no longer shown to users aged 13 to 17 in recommendations or search results. The company also says it is working with the aid organization StopNCII, Meta and Bumble to stop the spread of explicit images on the platform.